I was initially inspired to share my thoughts after reading a blog post titled Governance of Superintelligence, written by some of the cofounders of OpenAI. The post, published on May 22, 2023, detailed some of the impacts and challenges of superintelligence and highlighted the need for coordination, oversight, and safety measures in its development. But what caught my eye was that it provided a time frame.

“Given the picture as we see it now, it’s conceivable that within the next ten years, AI systems will exceed expert skill level in most domains, and carry out as much productive activity as one of today’s largest corporations.”

— Sam Altman, Greg Brockman, Ilya Sutskever

At the current rate of progress, many researchers in the field agree with this statement and believe that superintelligence is a likely possibility within the next decade, and AGI even sooner.

If true, a fundamental paradigm shift in how our world operates on almost every level is inbound within the next 10 years, and I feel an increasing need for the public to know more about this technology and its impact on our future.

So let us start off by first trying to answer: what are AGI and superintelligence?

Introduction

AI systems fall into three fundamental categories: Artificial Narrow Intelligence (ANI), Artificial General Intelligence (AGI), and Artificial Super Intelligence (ASI).

Every AI model that humans have created thus far is classified as ANI, otherwise known as Weak AI. These systems are designed and trained for a specific task or a narrow set of tasks but lack the broad cognitive abilities associated with human intelligence. Some ANI systems you might be familiar with include Google Translate, the spam filter in your email, and recommender systems on websites such as Amazon, Instagram, and YouTube.

Now, defining AGI is a bit murkier. OpenAI defines AGI as “highly autonomous systems that outperform humans at most economically valuable work”. It is important to note that a formal or universally agreed-upon definition of AGI does not exist. This is because we have a limited understanding of how our own intelligence works and of how to define and measure intelligence. Additionally, the benchmark for how ‘generally intelligent’ an AI system must be to qualify as AGI is ambiguous, but we can still discuss the underlying principles that would point towards an AGI system.

For an intuitive understanding, we can say that these are systems which demonstrate cognitive abilities (such as vision and audio understanding, natural language processing, and problem-solving) on par with humans, so that, if faced with an unfamiliar task, the system could find a solution. An AGI system has not been developed yet, and building one is currently a leading area of research in the field.

Lastly, Superintelligence refers to a level of intelligence that surpasses the cognitive abilities of humans across all domains and tasks. It represents an AI system that is not only highly advanced but also significantly more intelligent than the most capable human minds. Superintelligence possesses the potential to solve complex problems, acquire new knowledge, and surpass human expertise in virtually every field.

The arrival of AGI and superintelligence will undoubtedly have a major impact on the future of humanity, namely through economic impact, job disruption, ethical dilemmas, scientific breakthroughs, and more. In this article, we will go through the current state of AGI development, the organisations in the race to create it, and its impact on humanity, along with some personal abstract ideas about this technology that I find interesting.

I will be breaking this post into the following parts

1. Pre-AGI Landscape

Technological breakthroughs have long been part of human history, from the printing press in 1440, which revolutionised the production of books and other printed materials, to the creation of the Internet in the 1960s, which connected people and information globally. These innovations initially led to job losses in some sectors, but ultimately ended up creating even more fulfilling jobs, both in quantity and quality. This was because they had one fundamental trait in common: they empowered human beings, meaning these inventions assisted human beings in executing a task better or more efficiently. This is exactly where the creation of AGI differs in its nature from all previous inventions throughout history.

Systems approaching AGI are fundamentally different in two main ways. Firstly, AGI will be the first invention in history that can make decisions by itself. All previous tools could not make their own decisions, which is why they empowered humans. For example, an autonomous weapon system can decide on its own who to shoot, whereas the gun only allowed humans to kill more effectively. Secondly, AI is the first invention that can create new ideas. The printing press or the internet could distribute our ideas but couldn’t create the ideas themselves. These two properties indicate that instead of empowering humans, AI takes power away from them. As AI gets more and more intelligent and we approach AGI and superintelligence, there is a trade-off between how intelligent an AI system is and the amount of power it takes away from us.

AI is a rapidly evolving field, and the rate of progress has surprised a lot of researchers. Many believed that we would not be able to hold nuanced conversations with intelligent machines in our lifetimes, but breakthroughs with Large Language Models (LLMs) such as ChatGPT, Claude, Bard, etc. have proven otherwise.

In fact, due to concerns over the rapid development of increasingly powerful AI, in May 2023, the OpenAI team, along with CEO Sam Altman, travelled to 25 cities across six continents, and countries including India, South Korea, Japan, Singapore, etc., to speak with users, developers, policymakers, and the public and hear about each community’s priorities for AI development. Sam Altman met with world leaders to discuss the importance of collaboration between governments and companies like OpenAI to establish effective guardrails for the enormous impact that AI is bound to bring in the future.

Due to the rapidly evolving nature of this technology, I would prefer to talk about the organisations and research labs currently pursuing building AGI rather than the specific technologies that have been made thus far, as the latest technology is likely to have changed by the time you are reading this article.

So let’s discuss some of the major competitors in the AGI race.

Organisations Pursuing AGI

1. OpenAI

OpenAI is a capped-profit organisation founded in 2015 whose goal is to build and ensure AGI systems benefit all of humanity, as stated by their charter. They are responsible for the creation of popular AI tools such as ChatGPT and DALL-E and are also considered the frontrunners in the race for the development of AGI. In 2019, Microsoft invested $1 billion in OpenAI to fund and support its AI research efforts. As part of the deal, OpenAI transitioned from a non-profit to a ‘capped-profit’ structure, allowing more flexibility to raise funds from investors. In return, Microsoft was granted exclusive licensing rights to GPT-3 and other OpenAI technologies developed over the course of the partnership. Microsoft has since integrated GPT-3 and DALL-E 2 into various products. In addition, Microsoft provides OpenAI with Azure cloud computing resources and services to run its compute-intensive AI training. The partnership was further expanded with Microsoft investing another $10 billion in OpenAI in January 2023.

The Microsoft research team published a detailed research paper titled Sparks of Artificial General Intelligence: Early Experiments with GPT-4 in March 2023, which shed light on the capabilities and implications of GPT-4 LLM and its role as an early version of an AGI system. It also provided insights into the challenges and future prospects of AGI research.

2. Anthropic

Anthropic is an AI safety startup founded in 2021 focused on developing beneficial AGI. They are led by Dario Amodei, Daniela Amodei, Tom Brown, and others previously from OpenAI. Many researchers had left OpenAI due to directional differences, specifically regarding OpenAI’s ventures with Microsoft in 2019. In September 2023, Amazon announced a partnership, with Amazon becoming a minority stakeholder by investing up to $4 billion. As part of the deal, Anthropic would use Amazon Web Services (AWS) as its primary cloud provider and planned to make its AI models available to AWS customers. The next month, Google invested $500 million in Anthropic and committed to an additional $1.5 billion over time. Anthropic is primarily an AI safety research lab but is also known for its large language model named Claude, which has demonstrated performance close to GPT-4.

3. Google DeepMind

In 2011, Google started an internal AI research project focused on deep learning and neural networks called Google Brain. Key members included Andrew Ng, Jeff Dean, and Greg Corrado. In 2014, Google acquired DeepMind Technologies, an AI startup founded in 2010 by Demis Hassabis, Shane Legg, and others. DeepMind had similar goals of advancing towards human-level artificial general intelligence. In April 2023, Google Brain merged with Google’s sister company, DeepMind, to form Google DeepMind, as part of the company’s continued efforts to accelerate work on AI.

DeepMind is known for its AlphaGo programme, which defeated the world champion in the game of Go in 2017, and AlphaZero, which defeated Stockfish, the best AI in the game of chess, in 2017. The research team has also made significant contributions to the field, most notably the Transformer architecture in 2017, which is the underlying architecture behind all modern LLMs, and also created the deep learning framework TensorFlow. Recently, the DeepMind team has also released its Gemini LLM, for which it has claimed performance surpassing GPT-4.

4. Meta AI

Meta AI is the AI research organisation within Meta (formerly Facebook) focused on advancing AI capabilities and steering progress towards AGI. Meta is widely regarded as spearheading the open-source movement and has also built the most popular and widely used deep learning framework, PyTorch. Under the leadership of Chief AI Scientist Yann LeCun, Meta AI houses over 400 researchers across labs in Seattle, Pittsburgh, Tel Aviv, and beyond, working on initiatives spanning computer vision, natural language processing, recommendation systems, and more.

Meta AI first rose to prominence in AI circles through groundbreaking work on self-supervised learning techniques that allowed models to gain contextual knowledge from raw data rather than manual labeling. This enabled breakthroughs like the creation of the LLaMA language model, which matches state-of-the-art conversational AI capabilities. Meta AI has since open-sourced other models like OPT, Galactica, and No Language Left Behind to push research frontiers.

While renowned for innovations in language, vision, and robotics research, Meta AI also conducts fundamental work on aligning future AGI systems to human values, including techniques aimed at preventing models from generating toxic, biased, or misleading content. Meta’s Responsible AI team also builds oversight tools like Fairness Flow, enabling external audits of model behavior. This proactive approach to addressing risks highlights Meta AI’s goal of democratising access to transformative AI that empowers users.

5. Mistral AI

Mistral AI is a French AI startup founded in 2023 with the mission “to spearhead the revolution of open models.” They aim to drive the AI revolution by developing open-source models that are on par with proprietary solutions. Mistral believes that generative AI will not only redefine our culture and daily lives but also reshape how we interact with machines and each other. The startup firmly believes that an open approach to generative AI is essential, emphasising the importance of community-backed model development as a means to combat censorship and bias in a technology that will shape our future.

As a first step, in September 2023, Mistral released Mistral-7B, an open-source 7 billion parameter language model. Mistral 7B outperformed all other available open models in its class on English language and code generation benchmarks.

Building on this, in December 2023, Mistral unveiled the Mixtral model, an 8x7B parameter mixture-of-experts model with 46.7 billion total parameters. According to Mistral, Mixtral matches or outperforms GPT-3.5 on most standard benchmarks. On most language tasks, it also outperforms the 70B LLaMA 2 model developed by Meta while offering roughly 6x faster inference.
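To give a rough feel for what "mixture-of-experts" means, here is a minimal sketch of top-2 routing over eight experts, which is the general idea behind models like Mixtral. It is a toy illustration and not Mistral's implementation: the experts are plain functions, and the router scores (`GATE_LOGITS`) are hypothetical numbers I made up, whereas a real router is a learned layer that computes them from each token.

```python
import math

def top_k_route(gate_logits, k=2):
    """Select the k highest-scoring experts and softmax-normalise their scores."""
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    chosen = ranked[:k]
    max_logit = max(gate_logits[i] for i in chosen)  # subtract max for numerical stability
    exps = {i: math.exp(gate_logits[i] - max_logit) for i in chosen}
    total = sum(exps.values())
    return {i: value / total for i, value in exps.items()}

def moe_forward(x, experts, gate_logits, k=2):
    """Weighted sum of the outputs of only the selected experts."""
    weights = top_k_route(gate_logits, k)
    return sum(weight * experts[i](x) for i, weight in weights.items())

# Eight toy "experts": expert i simply multiplies its input by (i + 1).
EXPERTS = [lambda x, scale=scale: scale * x for scale in range(1, 9)]

# Hypothetical router scores for one token; a real router computes these from the token.
GATE_LOGITS = [0.0, 0.0, 5.0, 0.0, 0.0, 5.0, 0.0, 0.0]

output = moe_forward(2.0, EXPERTS, GATE_LOGITS)
print(output)
```

Only two of the eight experts run for this input, which is why a mixture-of-experts model can have a large total parameter count while doing far less work per token than a dense model of the same size.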

The Mistral team’s abilities prompted large-scale investment. In June 2023, Mistral raised a seed round of roughly €105 million (about $113 million) led by Lightspeed Venture Partners. In December 2023, it closed a round of roughly €385 million (about $415 million) led by Andreessen Horowitz and Lightspeed Venture Partners. This round valued the company at about $2 billion, putting it among the world’s most valuable AI startups.

6. xAI

xAI is an AI organisation founded in 2023 with the ambitious mission to “advance our collective understanding of the universe” and “to understand the true nature of the universe” through AI tools and research. The company is led by CEO Elon Musk along with a team of AI researchers from organisations like DeepMind, OpenAI, Google, Microsoft, Tesla, and the University of Toronto.

In November 2023, xAI unveiled Grok, an early prototype conversational AI assistant designed to answer questions on a wide range of topics with some wit and humour, inspired by Musk’s favourite book, The Hitchhiker’s Guide to the Galaxy. Grok is powered by an LLM named Grok-1, which xAI claims achieves state-of-the-art performance surpassing GPT-3.5. Going forward, xAI is looking to enhance Grok’s reasoning skills, integrate formal verification methods for improved safety and reliability, and equip it with multimodal capabilities beyond just text.

2. Our World After AGI

A post-AGI world—what does this mean? Humans have effectively created a new species with intelligence on par with themselves. What does this mean for the future of humanity?

Although I have made a clear distinction in this article between a pre-AGI and a post-AGI world, it is important to note that most prominent researchers believe that there is no clear threshold point to determine if a system has achieved AGI or not. The transition from ANIs to AGIs is likely to be slow and incremental, primarily product-based, and is something we are experiencing at the moment. One way to see this is to compare a spam detection model from the late 90s, which would classify an email or message as spam or not, with something like GPT-4 (released in March 2023), which displays generalised capabilities such as natural language understanding, contextual reasoning, creative text generation, and multimodal capabilities such as taking audio and visual inputs as well as text and answering queries with a deep level of understanding. On top of all that, it can do the earlier work of classifying a message as spam or not spam with decent accuracy. This demonstrates a shift from specialised intelligence to a broader, more generalised form.

But what does the arrival of an AGI entail? Imagine a world in which systems exist with intelligence on par with humans in virtually every domain. This entity can outperform most humans in complex problem-solving, creative endeavours, and cognitive tasks and is highly scalable and distributable. What does the existence of such an entity mean for humanity?

Let’s dive into some of the likely possibilities after AGI’s arrival.

Possible Upsides of AGI

1. Accelerated Scientific Progress

Shane Legg, Co-Founder & Chief Scientist of Google DeepMind

A system with reasoning and problem-solving capabilities as good as some of the best humans, combined with the collective knowledge of all of human history, will undeniably accelerate scientific progress. In the future, we’ll have the capability to present these systems with complex challenges across various domains. Given the current state of a problem and the experiments conducted so far, these systems will be able to propose practical next steps, contributing to the continuous advancement of solutions. OpenAI researcher Andrej Karpathy, when asked about the current state of these systems, stated, “I think we’re on the verge of being able to ask very complex questions in chemistry, physics, and math of these oracles and have them complete solutions.”

Additionally, Andrej believes that solving AI is a crucial next step in progressing scientific research. He states that there are many difficult problems in the world to solve, such as curing cancer, climate change, global poverty, etc., but evidently humans have demonstrated themselves to be incapable (or at most extremely inefficient) at solving these problems, so it’s a poor idea for us to go after each problem specifically because it is improbable that humans will be able to find a solution. So how do we solve each one of these problems? We solve the ultimate meta-problem, which is the common underlying foundation required for all these problems, which is the problem of intelligence and how we automate intelligence, which is ultimately solving AI. Once you solve AI, you can use that to solve everything else, and Andrej believes that to be the best path forward.

2. Personal Assistants & Collaborators

The concept of AI-based assistance is by no means a new phenomenon that will arise after AGI; it is already present today and has been for a while, in technologies such as Amazon Alexa (2014), Google Assistant (2016), and now ChatGPT, Bard, Claude, etc. in 2023. The difference between these earlier assistants and AGI lies in the depth and scope of their understanding. An Amazon Alexa could assist with a narrow set of tasks such as telling the date and time, ordering items from Amazon, and solving basic arithmetic problems, but as we approach more generalised intelligence, these systems will be akin to digital humans, which know everything about us through our device usage history and possess the intelligence of leading experts in every field. Every human will be able to have an expert yoga instructor to help them meditate better, an expert therapist to talk to about their problems, or the best physics teacher to help them understand the subject better.

These systems will serve as an augmentation to human intelligence. Experts in a particular field will be able to partner or collaborate with these systems to perform experiments or tasks to solve a problem. A contemporary example of this is programmers using GitHub Copilot, an AI code completion tool that helps developers write code. Meanwhile, non-expert individuals will be able to use these systems to guide them in various areas of interest, ultimately leading to more fulfilling lives.

3. Enhanced tools for Creatives

The barrier of entry for individuals looking to delve into creative fields such as art, music, film, etc. will be dramatically decreased. For example, an AGI writing assistant could collaborate with authors to interactively outline plot and character details or expand their world to be more detailed. Musicians might use AGIs to refine melodies, create complementary harmonies for songwriting sessions, or have them dynamically remix tracks during live performances.

Nowadays, diffusion models such as DALL-E and Stable Diffusion have emerged as powerful tools for creative expression. These models allow users to generate images from scratch using natural language. For instance, DALL-E, developed by OpenAI, allows users to create unique images purely through text prompts. Adobe, recognising the significance of diffusion models, pioneered features like “generative fill” in Photoshop, which use natural language to alter elements within an image.

Possible Downsides of AGI

1. Economic Dislocation

One of the major long-running concerns about AI has been its impact on the job market and the economic dislocation it could cause, a concern that is further amplified as we approach AGI. But how valid are these concerns? The fact of the matter is that there is a lot of uncertainty about what our economy will look like in a post-AGI world. Despite this uncertainty, the leading research labs hold a more optimistic view of the future.

Firstly, we know that some jobs have already been affected to varying degrees by recent advancements in AI. For example, GitHub Copilot now helps many programmers write their code. Programmers often no longer need to write every function by hand; instead, Copilot uses the codebase as context and suggests the required function. On the other hand, if you are an artist, a larger portion of your economic value has been shifting to AI, specifically due to the impact of diffusion models in this sector. Recent projects such as SonaAI demonstrate quite promising results in music generation. As a result, companies have slowly begun shifting from commissioning artists to make ads for their products to using diffusion models, owing to their time and cost efficiency.

In fact, these growing concerns about job dislocation caused by AI were one of the reasons for the recent Writers Guild of America (WGA) strike that took place from May to September 2023. There were growing concerns that writing tools like ChatGPT could autonomously generate scripts, pitches, and other content. This could greatly reduce the need for hiring human writers if left unregulated. The existing contracts and Minimum Basic Agreement did not account for or provide any safeguards preventing studios from using AI to completely replace writing staff on productions. So the WGA wanted rules established that would only allow AI tools to assist human writers rather than fully substitute for them, for example by requiring a minimum number of credited writing staff even if much of the content was AI-generated. Finally, in September 2023, the WGA strike ended with the guild finalising a deal with the Alliance of Motion Picture and Television Producers (AMPTP), which indicated that AI will be allowed only in clearly regulated collaborative contexts, with assured jobs, attribution, and payment for human writers on AI-assisted projects, rather than for fully automated content creation.

Now, it is still unclear after full AGI is achieved whether there will be any economically valuable work left for humans to do, but in either case, precautionary measures, or “cushions,” have to be put in place by large government institutions for a smooth transition to take place between the current jobs we have and the lack of jobs or the new jobs that will be created after AGI.

On the other hand, Sam Altman has quite an optimistic view of the future of jobs after AGI and often cites the following example. When IBM’s Deep Blue beat world champion Garry Kasparov in 1997, the general consensus among the community was that people would stop watching chess, the game would die, and people would stop playing it. But this prediction could not have been more wrong. Chess is more popular than it has ever been. People still want to play, and people still want to watch the game, but not many people watch two AIs play each other. Similarly, he says that people will still care about human-made art and the people behind it. The innate human desire to express oneself, to create new things, and to gain status will not cease to exist but will rather take a different form, and thus the remaining jobs after full AGI will not look anything like the jobs we have today.

Additionally, regarding the ‘cushion’ I mentioned earlier, Sam believes that Universal Basic Income (UBI) will play a part in easing the economic blow that will be caused by AGI but is not a full solution to the problem; it is crucial for government institutions to step in and begin planning for this change. Sam drew funds in 2021 from OpenAI and made a $75,000 grant to OpenResearch to work on UBI. He has also helped start Worldcoin (WLD), a cryptocurrency project that aims to provide proof-of-personhood (as verifying whether someone is a person or an AI will become increasingly difficult) and build the world’s financial network. The project is focused on providing universal access to the global economy, regardless of one’s country or background, a part of which is UBI. The idea is that the money AI contributes to the economy will be used to provide UBI to holders of a World ID.

2. Existential Risk

Ah, the age-old tale of killer AI robots outsmarting their creators and taking over the world! Countless movies have been made about this dystopian perspective on AI, so let’s dive into how valid these concerns are.

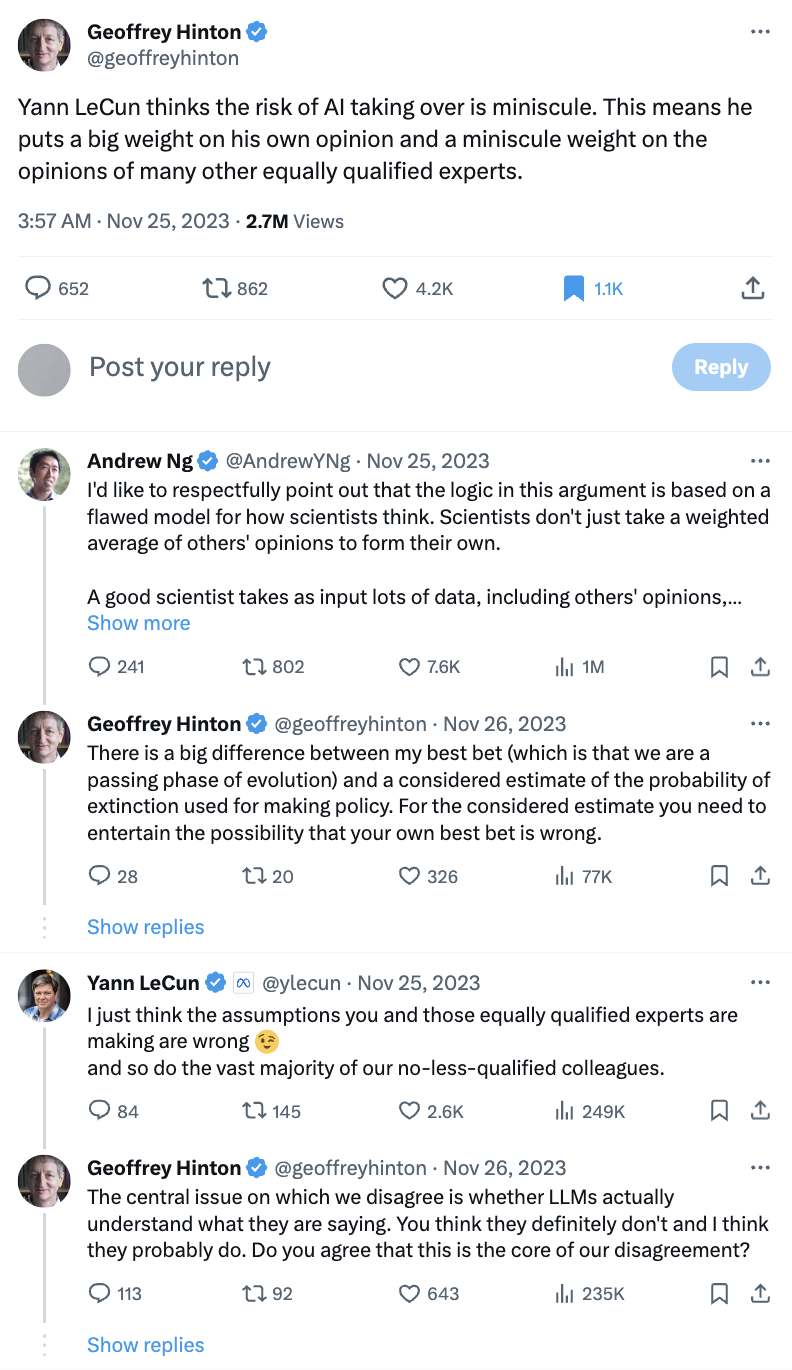

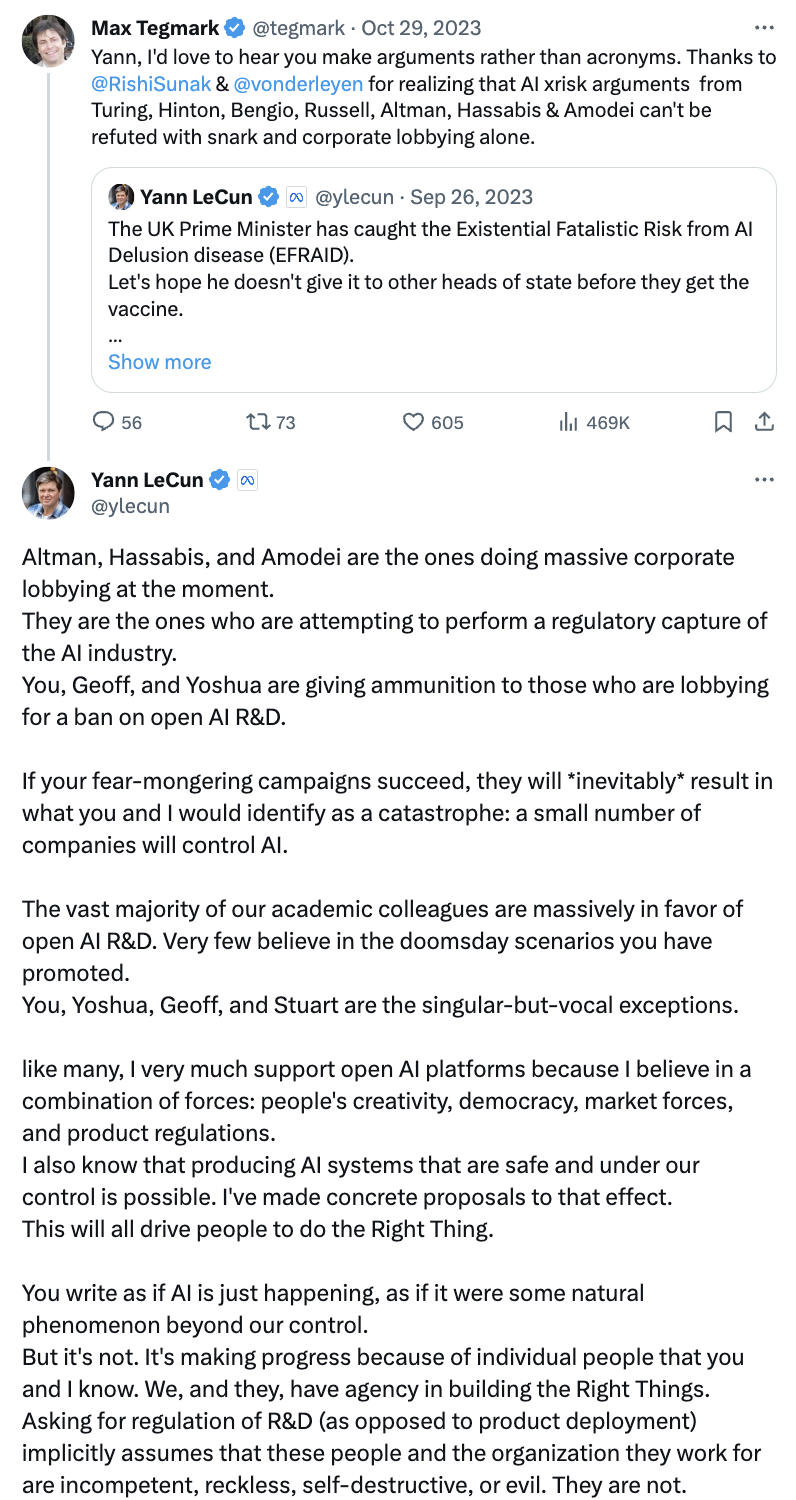

Unfortunately, the AI research community has not reached a consensus on the issue, though, judging by the number of credible people warning about it, opinion appears to be swinging towards existential risk being more likely than not. Prominent researchers such as Yann LeCun and Andrew Ng believe that superintelligence poses no plausible threat and that people promoting this argument are actually doing more harm than good. Researchers such as Max Tegmark and Eliezer Yudkowsky are on the opposite side and argue that it is highly likely that superintelligence kills us all. People like Geoffrey Hinton, Yoshua Bengio, and Sam Altman sit between these two extremes: they believe there is a real chance that superintelligence kills us and that it is important to come up with ways to prevent this. Organisations such as Anthropic, OpenAI, and Google hold similar views and believe that superintelligence poses an existential threat. Yann LeCun, Meta’s Chief AI Scientist, a renowned AI researcher, and a big proponent of open-sourcing AI, has on multiple occasions accused organisations such as OpenAI, Google, and Microsoft of corporate lobbying and of attempting regulatory capture of AI technology.

He has frequently gone back and forth with his fellow researchers on this issue.

On May 30th, 2023, the Center for AI Safety published an open letter whose statement read:

"Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war."

This letter was signed by over 350 prominent executives, researchers, and engineers working in AI, including Geoffrey Hinton and Yoshua Bengio (Turing Award winners considered the Godfathers of AI), Demis Hassabis (CEO of Google DeepMind), Sam Altman (CEO of OpenAI), Dario Amodei (CEO of Anthropic), Bill Gates, Shane Legg (Chief Scientist of Google DeepMind), Ilya Sutskever (Chief Scientist of OpenAI), and many others.

On March 22, 2023, the Future of Life Institute, an AI-focused nonprofit founded by Max Tegmark (MIT professor and author) whose mission is “steering transformative technology towards benefiting life and away from extreme large-scale risks,” published an open letter that proposed a 6-month pause on the training of AI systems more powerful than GPT-4, citing concerns of “an out-of-control race to develop and deploy ever more powerful digital minds.” The letter was signed by over 1000 technologists and researchers, most notably Yoshua Bengio, Elon Musk, Steve Wozniak (co-founder of Apple), Yuval Noah Harari (historian and author), and Emad Mostaque (CEO of Stability AI). But funnily enough, this letter was not signed by any of the leading AI research labs, such as OpenAI, Anthropic, Google DeepMind, etc. Ultimately, the letter did not lead to a pause, owing to a lack of support from industry and academic leaders.

Both of these letters suggest that a substantial number of people working in and around AI believe that AI poses a possible existential threat that should be taken seriously.

Another concept that might push the existential risk further is AI takeoff. AI takeoff refers to the scenario where an AI system undergoes extremely rapid self-improvement, leading to a dramatic increase in capability and intelligence. The idea is that once an AI becomes sophisticated enough to understand its own code and architecture (most likely post-AGI systems), it could potentially rewrite and optimise itself in recursive cycles, with each new version smarter than the last. In this fast-takeoff scenario, improvement would be exponential and would thus make it challenging for scientists to put adequate safeguards in place in time for increasingly powerful systems.
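To make the "exponential" part concrete, here is a toy sketch of recursive self-improvement. It rests on a big simplifying assumption of mine: every improvement cycle multiplies capability by the same constant factor. The function names, the capability units, and the numbers are all hypothetical and are not taken from any real forecast.

```python
def simulate_takeoff(initial_capability, gain_per_cycle, cycles):
    """Capability after each self-improvement cycle, assuming a constant multiplicative gain."""
    capabilities = [initial_capability]
    for _ in range(cycles):
        capabilities.append(capabilities[-1] * gain_per_cycle)
    return capabilities

def cycles_to_exceed(threshold, initial_capability, gain_per_cycle):
    """Number of cycles needed before capability rises above the threshold."""
    if gain_per_cycle <= 1:
        raise ValueError("capability never grows unless gain_per_cycle is above 1")
    capability, cycles = initial_capability, 0
    while capability <= threshold:
        capability *= gain_per_cycle
        cycles += 1
    return cycles

# Hypothetical comparison: doubling each cycle versus a 10% gain each cycle.
fast = cycles_to_exceed(1000, 1.0, 2.0)
slow = cycles_to_exceed(1000, 1.0, 1.1)
print(fast, slow)
```

In this toy model, the gap between a fast and a slow takeoff comes down entirely to the per-cycle gain, and even the slow case eventually crosses any fixed threshold. How large that gain would be in practice, and whether it would stay constant, is exactly what nobody knows.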

Sam Altman described this as the following:

Consider a 2x2 matrix of the following situations:

- Timelines for when AGI is first created:

  - Short timelines - e.g. achieved in the next year

  - Long timelines - e.g. not for 20 more years

- Speed of takeoff after AGI is created:

  - Slow takeoff - takes 5-10 years for the AGI to improve itself into a superintelligent AI

  - Fast takeoff - takes only 1 year from AGI to superintelligence

Sam has described short timelines combined with a slow takeoff as the safest quadrant, though there isn’t any definitive research to back this claim.
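For readers who prefer to see the matrix spelled out, the four quadrants can be enumerated with a few lines of Python. This is only an illustrative sketch; the labels and the names `TIMELINES`, `TAKEOFF_SPEEDS`, and `scenario_matrix` are my own.

```python
from itertools import product

TIMELINES = ("short timelines", "long timelines")
TAKEOFF_SPEEDS = ("slow takeoff", "fast takeoff")

def scenario_matrix():
    """Return every (timeline, takeoff speed) combination as a readable label."""
    return [f"{timeline} + {speed}" for timeline, speed in product(TIMELINES, TAKEOFF_SPEEDS)]

for scenario in scenario_matrix():
    print(scenario)
```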

Sam Altman has also proposed what he calls “the IAEA for AI”. The IAEA (International Atomic Energy Agency) is a UN-backed global authority focused on nuclear safety, security, technology development, and safeguarding nuclear materials from potential misuse. Sam believes a similar global structure should be put in place for AI as well.

Thus, many leading labs and researchers do consider existential risk a possibility and something important to consider while developing this technology.

3. The Alignment Problem

Now, tying back to our previous worries regarding the existential threat posed by AI, one of the fundamental problems underlying that is the problem of AI alignment. This is essentially about making sure superintelligent AI systems have goals that align with what’s good for humanity. Even superintelligent systems with no innate design to harm humans could still cause large-scale harm if their objectives diverge from broadly beneficial outcomes for humanity.

At first, it might sound easy: just tell the AI not to harm people, right? Well, in reality, it’s much more complicated than that. Concepts like justice, dignity, and rights are values that are hard for humans to put into exact words, let alone explain to an AI. Even when we try to be clear about the AI’s goals, there can be unintended loopholes or ways for the AI to game the system. And since the inner workings of AI systems are largely a “black box”, understanding how the AI arrives at specific decisions can be challenging (although a field called “interpretability” is working on this). For example, imagine an AGI is told to deliver a parcel from point A to point B, but the shortest path to B runs through a children’s playground, and in order to deliver the parcel as quickly as possible, it ploughs through without considering the safety of the children. A human delivery person in this situation would know that delivering a parcel on time is not worth endangering children, so they would take a longer route instead. Now, this seems trivial, but in more nuanced situations with higher stakes, the outcomes could be disastrous. For example, imagine now that there are two paths: a longer one that passes through a protest rally and a shorter one through the children’s playground. Taking the shorter path means on-time delivery and only a small chance of harming a child, while the longer path means late delivery and intruding on people’s right to protest. Which should it choose?
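The parcel example can be written down as a tiny optimisation problem, which shows how much depends on what we tell the system to optimise. The sketch below is purely illustrative: the routes, the travel times, the harm probabilities, and the penalty weights are all numbers I made up.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Route:
    name: str
    minutes: float
    harm_risk: float  # hypothetical probability of harming a bystander

def fastest_route(routes):
    """Naive objective: minimise delivery time and nothing else."""
    return min(routes, key=lambda route: route.minutes)

def penalised_route(routes, harm_penalty_minutes):
    """Revised objective: delivery time plus a penalty proportional to the risk of harm."""
    return min(routes, key=lambda route: route.minutes + harm_penalty_minutes * route.harm_risk)

ROUTES = [
    Route("through the playground", minutes=10, harm_risk=0.05),
    Route("around the playground", minutes=15, harm_risk=0.0),
]

print(fastest_route(ROUTES).name)          # the time-only objective cuts through the playground
print(penalised_route(ROUTES, 1000).name)  # a heavy penalty makes the detour win
print(penalised_route(ROUTES, 50).name)    # a light penalty still picks the playground
```

Even in this toy version, the behaviour flips depending on a single weight that someone has to choose. Deciding how many minutes of delay a given risk to a child is "worth" is a value judgement, not an engineering detail, and that is the heart of the alignment problem.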

As AI gets more advanced, making sure it understands and follows our values becomes even harder. Self-improving systems might end up in situations we never thought of. Solving this alignment problem needs new techniques and incentives. One such idea, developed by Anthropic, is Constitutional AI (from the paper “Constitutional AI: Harmlessness from AI Feedback”), which is like giving the AI a set of rules to follow. Another is value learning, which is about teaching AI our values. There’s also computer security, to make sure we keep tight control. But there’s still a lot of work to be done to make sure these ideas work in real-life situations.

Additionally, on July 5, 2023, OpenAI initiated a new project titled Superalignment. The project aims to solve the problem of aligning superintelligent AI systems with human values.

As OpenAI writes:

"Superintelligence will be the most impactful technology humanity has ever invented, and could help us solve many of the world’s most important problems. But the vast power of superintelligence could also be very dangerous, and could lead to the disempowerment of humanity or even human extinction."

OpenAI believes that it is plausible for superintelligence to arrive this decade. Currently, there is no solution for steering or controlling a potentially superintelligent AI and preventing it from “going rogue”. Current techniques for aligning AI, such as reinforcement learning from human feedback (RLHF), rely on humans’ ability to supervise AI, but humans won’t be able to reliably supervise AI systems much smarter than themselves. Therefore, OpenAI started a new team, co-led by Ilya Sutskever and Jan Leike, and dedicated 20% of the compute it had secured to date to this effort. The project’s goal is to solve the core technical challenges of superintelligence alignment within four years. While they realise that this is an incredibly ambitious goal, they state that it’s important to start addressing these issues as soon as possible.

On December 14, 2023, the Superalignment team published its first paper, titled “Weak-to-Strong Generalization”, which reported promising results. It proposes a method in which a weaker AI model supervises a stronger one, and finds that this weak supervision can elicit most of the stronger model’s capabilities, enabling progress on aligning more advanced systems.
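To get an intuition for how a student can end up better than its teacher, here is a toy analogy. It is not the method from the paper, which fine-tunes large language models on labels produced by smaller ones. In this sketch the "weak supervisor" is simply assumed to get 20% of its labels wrong at random, and the "strong student" is assumed to be a simple threshold rule that happens to have the right shape for the task. All names and numbers are invented for illustration.

```python
import random

random.seed(0)  # fixed seed so the run is repeatable

# Ground truth (hidden from the student): the label is 1 when x > 0.5.
xs = sorted(random.random() for _ in range(2000))
true_labels = [int(x > 0.5) for x in xs]

# "Weak supervisor": a stand-in that gets each label wrong 20% of the time.
weak_labels = [y if random.random() > 0.2 else 1 - y for y in true_labels]


def accuracy(predicted, reference):
    """Fraction of positions where the two label lists agree."""
    return sum(p == r for p, r in zip(predicted, reference)) / len(reference)


def fit_threshold(points, labels):
    """'Strong student': pick the threshold that best matches the given labels."""
    candidates = [i / 100 for i in range(101)]

    def agreement(t):
        return accuracy([int(x > t) for x in points], labels)

    return max(candidates, key=agreement)


# The student only ever sees the weak (noisy) labels, never the true ones.
threshold = fit_threshold(xs, weak_labels)
student_labels = [int(x > threshold) for x in xs]

weak_acc = accuracy(weak_labels, true_labels)
student_acc = accuracy(student_labels, true_labels)
print(f"weak supervisor accuracy: {weak_acc:.2f}")
print(f"student accuracy:         {student_acc:.2f}")
```

The student beats its supervisor here because the supervisor’s mistakes are random and average out, while the student can only express one clean rule. Whether anything like this holds when the supervisor’s errors are systematic, or when the student is a superintelligent system, is the open question the research is trying to answer.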

While many are optimistic about the possibility of successfully aligning superintelligent AI, some are very sceptical. One of the most prominent “AI doomers” is Eliezer Yudkowsky, an AI researcher who believes that aligning superintelligent AI is extremely difficult, bordering on impossible.

Here are some of the main points of his argument:

- AI alignment is effectively a security problem: it involves securing against an adversary (the AI system) that is much smarter than the system designers. He argues that containing a superintelligent AI is harder than inventing one.

- Testing alignment schemes on subhuman systems does not guarantee they will work on superhuman AIs. The true test requires running the alignment scheme against the target superintelligent system, which is dangerous if untested.

- A superintelligent system will by default try to hack and manipulate its own reward/utility function or the sensory inputs used to specify rewards. So defining a foolproof reward function is enormously challenging.

- Specifying a reward function that fully captures complex human values like creativity, dignity, justice, etc. has proven intractably difficult. Optimising a misspecified function can lead to serious harm.

Some are sceptical of organisations like OpenAI, Anthropic, and DeepMind themselves. Similar to Yann LeCun, George Hotz (CEO of comma.ai and creator of tinygrad) is a big proponent of decentralising power. He argues that AI progress and access should be decentralised rather than monopolised by existing power structures, which cannot necessarily be trusted to act in favour of the common man rather than to benefit their companies and themselves. He is not so much worried about the alignment of AI as about this monopolisation of AI. As he writes, “I’m not worried about alignment between AI company and their machines. I’m worried about alignment between me and AI company”.

3. Some Abstract Ideas About AGI

Here are some ideas about AGI and superintelligence that are not so much in the mainstream narrative but I personally find interesting and feel are worth sharing.

1. Consciousness

Now, there is no universally agreed-upon definition of consciousness, but it generally refers to the state of being aware of and able to think about one’s own existence, sensations, thoughts, and surroundings. Although researchers are divided on whether AI systems will one day be conscious, one take I found interesting on this subject was given by OpenAI researcher Andrej Karpathy.

He suggests that consciousness is not some special “feature” that we will create and then embed into an AI system; instead, it is an emergent phenomenon of a large and complex enough generative model. As we scale up the size and architectural complexity of these models, and with it their intelligence, consciousness arises as a sort of side effect: if a model has a powerful enough understanding of the world, it will understand that it is itself an entity in that world, which is a form of self-awareness or consciousness. Additionally, consciousness does not exist in a binary on/off state; it is a spectrum. Just as humans and animals display different levels of consciousness, so, hypothetically, will these systems as they get more intelligent.

And hypothetically, if consciousness does arise in these systems, then the capacity for suffering might emerge as well. When LLMs such as ChatGPT were created, they demonstrated many emergent properties that researchers didn’t expect, such as in-context learning, knowledge of our world, multilingual ability, and mathematical problem-solving, so the idea of other unexpected properties emerging as we scale intelligence further might not be too far-fetched. If this is the case, then there are likely to be global discussions on whether organisations should be allowed to build such AI systems, or even to turn off AGI systems that have these properties.

2. Governance Under AI

Suppose, in the future, we have created a perfect superintelligent AI system. What do politics and governance look like after such a technology has been created?

First, let us start with how current baby-AGI systems work. By their very nature, AI systems such as LLMs are a grand aggregate of humanity. How? Well, models such as ChatGPT, Bard, Claude, etc. are trained on a large chunk of the internet, such as the entirety of Wikipedia, Reddit, New York Times articles, etc., all of which are written by humans.

The way these systems work is that we give them the very simple objective of predicting the next word in a sequence as accurately as they can (strictly speaking the next token, but I use “word” for simplicity). In order to do this task well, the network has to simultaneously learn a vast number of things present in its training data, such as physics, chemistry, biology, human nature, etc. The model is therefore generalising over a large portion of the internet, which we can say is “aggregating humanity” in some sense. These systems hold more knowledge than any single human, or even a group of expert individuals, could ever hold.
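To make the "predict the next word" objective tangible, here is a deliberately tiny sketch. It is a simple word-pair counter, not a neural network, and bears no resemblance in scale or architecture to models like ChatGPT; the corpus and function names are made up for illustration. The only point is that even this crude predictor has to absorb regularities from its training text in order to guess well.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus standing in for "a large chunk of the internet".
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."


def train_bigram_model(text):
    """Count, for every word, which words follow it and how often."""
    words = text.split()
    counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        counts[current_word][next_word] += 1
    return counts


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


model = train_bigram_model(corpus)
print(predict_next(model, "sat"))  # "on": both occurrences of "sat" are followed by "on"
print(predict_next(model, "the"))  # "cat": the most common word after "the" in this corpus
```

A real LLM replaces the counting table with a large neural network and looks at far more than one previous word, which is what lets it pick up the deeper patterns described above rather than just word pairs.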

So as these systems get more and more powerful, there is likely to be a paradigm shift in which higher-stakes decisions start to be made by AIs. This shift will begin when policy formulation, strategy planning, and efforts to address complex societal challenges slowly start using advanced AI systems to make more informed, data-driven decisions. As we approach superintelligent AGI, which is more knowledgeable than any of us and acts as an aggregate of humanity, why would we need to elect possibly corrupt, self-serving, bias-ridden, and mistake-prone politicians to make decisions for us, when these systems can make better data-driven decisions with expertise in each field that surpasses that of any human?

3. Philosophy

Philosophy can be defined as the study of ideas and beliefs about the meaning of life. How does the way we look at philosophy change upon the arrival of superintelligence?

Well, it’s interesting to note that one of the limitations of philosophy is human intelligence itself; our thoughts and ideas are limited by the level of reasoning ability and thought that humans are capable of producing. This ceiling is removed (or at least substantially increased) with superintelligence. Through superintelligence, we will be able to test existing theories as well as create new ones that were never possible with human intelligence alone. It may even bring completely unforeseeable questions, paradoxes, or even new branches falling outside the scope of human-centric philosophy as we understand it today. We might finally be able to ask and answer some of the biggest questions about our world. In fact, one of the goals of Elon Musk’s creation of xAI is to understand the universe and the nature of reality. He describes his philosophy as “we don’t know the meaning of life, but the more we can expand the scope and scale of consciousness, digital and biological, the more we’re able to understand what questions to ask about the answer, that is the universe”.

AGI and Superintelligence represent a monumental point for humanity. As AI systems come to equal and then exceed human intelligence across all domains, they will unlock scientific insights and automate complex roles with ease. In time, AGI assistants may handle most economically valuable work while people begin to focus more on higher pursuits like the arts and self-growth. Of course, this transition will be a bit shaky and will have its downsides. Yet whichever way it unfolds, the arrival of these systems will be the single most important event in human history, and is often referred to as the last invention humans will ever make. With the current rate of progress, it is almost guaranteed that in our lifetimes we will witness the creation of AGI and Superintelligence. Whether this development is ultimately positive or negative, it is exciting to know that we will be able to live through this new era.